Implementing Picture-in-Picture with Wowza and WebRTC

Almost every video-streaming application lets viewers talk to the broadcaster through a chat. But chat is not the best solution in every case, for example when the broadcaster does not just answer questions but holds a real two-way conversation with a viewer. So in our project we decided to add video calls to the app.

A few words about the project. In our app, the whole broadcast is controlled through a web interface. Streams come either from an action camera or from a browser (via WebRTC), and Wowza (streaming media server software) distributes the video. For convenience, let's divide the app's users into two categories: broadcasters (streamers), who conduct streams, and viewers.

We wanted the Video Calls feature to let viewers see and hear two video and audio streams, one from the caller and one from the broadcaster. It was meant to look like picture-in-picture, the TV feature in which the video of one channel overlays another (used, for example, to watch one program while waiting for another to start or for advertisements to finish). In our case, the caller's video was to be overlaid on the broadcaster's.

So we had to implement such video calls. Here are the requirements:

- Viewers see and hear both the caller and the broadcaster.

- The broadcaster has full control over the call: they can end it at any moment or temporarily turn off the caller's video and/or audio stream.

- Before the call goes live, the broadcaster sees a preview of it.

- Call latency is kept to a minimum.

Let us divide the task into two parts: organizing the video call and injecting the second audio and video streams into the broadcast.

Organizing video call

The main problem in this part is latency. Requirement 4 says it must be as low as possible, so we cannot use the action camera in this scenario: it introduces a 15 to 20 second delay. That leaves browser streaming, and since it already uses WebRTC (a collection of protocols for real-time communication), we decide to use WebRTC for the calls as well. Getting a video stream from one browser to show up in another is not very difficult, but a detailed walkthrough of the code would take some time. If you are interested, check this article.

Injecting stream

Server-side solution

Our first idea is to join the video streams with server-side software. For that, we need to deliver both streams to the server and write an application that mixes them. After some experimenting, we find that the FFmpeg command-line tool can receive multiple RTMP streams and join them into a single one.

Merging two videos into one:

ffmpeg -i bottom.avi -i top.avi \
  -filter_complex "[1:v] scale=iw/4:-1[tmp]; [0:v][tmp] overlay=0:0" \
  -c:v libx264 -b:v 800000 combined_video.avi

In this example both files have the same resolution. First, we scale the video from the second file to a quarter of its width while keeping the aspect ratio ([1:v] scale=iw/4:-1, where -1 lets FFmpeg compute the height) and label the result tmp. Then the video from the first file, [0:v], and the scaled result, [tmp], are merged with overlay=0:0, where 0:0 is the overlay's offset from the top-left corner of the frame (here, no offset).

To use live streams, we just replace the file names with URLs and add the -re option (read input at its native frame rate) before each -i. Because the output is now an RTMP URL rather than a file with an extension, we also select the FLV container explicitly with -f flv:

ffmpeg -re -i rtmp://hostname:1935/stream1 -re -i rtmp://hostname:1935/stream2 \
  -filter_complex "[1:v] scale=iw/4:-1[tmp]; [0:v][tmp] overlay=0:0" \
  -c:v libx264 -b:v 800000 -f flv rtmp://hostname:1935/stream-out

However, such console commands can't be used in production, because FFmpeg has to be restarted every time we turn the second video or audio stream on or off. A restart can interrupt the broadcast: some data may be lost, or already-streamed data may be reloaded from the cache, so parts of the broadcast disappear or repeat. These problems can be solved by writing a dedicated server on top of FFmpeg's libraries instead of the command-line tool.
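To make the restart problem concrete, here is a minimal Node.js sketch of the naive approach: a wrapper that respawns FFmpeg whenever the broadcaster toggles the caller's video. The URLs, the showCaller flag and the NaiveMixer class are hypothetical, and the process launcher is passed in so the logic can be exercised without FFmpeg installed. Because the filter graph is fixed when FFmpeg starts, every toggle kills one process and starts another, which is exactly where the gap or repeated segment appears.

```javascript
// Hypothetical sketch: build the FFmpeg arguments for the live mixing command.
// With showCaller = false, the caller's input and the overlay filter are omitted.
function buildFfmpegArgs({ mainUrl, callerUrl, outputUrl, showCaller }) {
  const args = ["-re", "-i", mainUrl];
  if (showCaller) {
    args.push(
      "-re", "-i", callerUrl,
      // Scale the caller to 1/4 width (keeping aspect ratio) and overlay it top-left.
      "-filter_complex", "[1:v] scale=iw/4:-1[tmp]; [0:v][tmp] overlay=0:0"
    );
  }
  // For an RTMP URL the FLV container is selected explicitly with -f flv.
  args.push("-c:v", "libx264", "-b:v", "800000", "-f", "flv", outputUrl);
  return args;
}

// Naive controller: the only way to change the filter graph is to restart FFmpeg.
class NaiveMixer {
  constructor(launch, urls) {
    this.launch = launch; // function(args) -> process-like object with kill()
    this.urls = urls;     // { mainUrl, callerUrl, outputUrl }
    this.proc = null;
    this.restarts = 0;
  }

  start(showCaller) {
    if (this.proc) {
      this.proc.kill("SIGTERM"); // the outgoing stream is interrupted here
      this.restarts += 1;
    }
    this.proc = this.launch(buildFfmpegArgs({ ...this.urls, showCaller }));
  }
}
```

In a real service, launch could be (args) => spawn("ffmpeg", args, { stdio: "inherit" }) using spawn from Node's child_process module. The restart counter only exists to show how often the broadcast would be cut.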

This approach has one more problem: synchronization. Both inputs are parts of one conversation, so the streams must be merged in sync. Otherwise, viewers will hear both people talking at once, or even hear the caller's question after the broadcaster has already answered it. To merge the streams properly, the server needs to know the delay between the call participants for each video chunk, but this information is lost once Wowza packs the streams into RTMP. Therefore, we decide to look for another solution.

In-browser solution

Now suppose the merging happens on the broadcaster's PC before the stream is transmitted to the media server. In this case, the synchronization problem disappears: the broadcaster sees and hears exactly the stream they send to Wowza. It also becomes much easier to implement the broadcaster's controls (e.g., turning off the caller's video or muting their audio). So we start looking for a browser-based solution.

First of all, we need a way to merge two video streams into one. The ability to draw the contents of a video element onto an HTML5 canvas turns out to be very useful here. Overlaying two videos with it is not difficult: on every frame, we draw both videos onto the canvas.
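A minimal sketch of that per-frame drawing, assuming mainVideo is a video element playing the broadcaster's stream and callerVideo one playing the caller's remote stream (both names, like the state object, are hypothetical). The caller is drawn at a quarter of the canvas width in the top-left corner, mirroring the FFmpeg overlay=0:0 example; setting state.showCaller to false simply skips the second drawImage call.

```javascript
// Draw one composited frame: broadcaster full-size, caller as a top-left inset.
function drawFrame(ctx, mainVideo, callerVideo, showCaller) {
  const { width, height } = ctx.canvas;
  ctx.drawImage(mainVideo, 0, 0, width, height);
  // videoWidth stays 0 until the caller's video has loaded its first frame.
  if (showCaller && callerVideo && callerVideo.videoWidth > 0) {
    const insetWidth = width / 4;
    // Keep the caller's aspect ratio.
    const insetHeight = (insetWidth * callerVideo.videoHeight) / callerVideo.videoWidth;
    ctx.drawImage(callerVideo, 0, 0, insetWidth, insetHeight);
  }
}

// Redraw on every animation frame; `state` is a mutable object the UI toggles.
function startCompositing(canvas, mainVideo, callerVideo, state) {
  const ctx = canvas.getContext("2d");
  const loop = () => {
    drawFrame(ctx, mainVideo, callerVideo, state.showCaller);
    requestAnimationFrame(loop);
  };
  requestAnimationFrame(loop);
}
```

One design caveat: most browsers pause requestAnimationFrame callbacks while the tab is hidden, so the composited stream may freeze if the broadcaster switches to another tab.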

To remove the caller's video from the stream temporarily, we just stop drawing the second video. Getting a MediaStream that WebRTC can send from the canvas is then easy with the captureStream method:

let stream = canvas.captureStream(30); // 30 FPS

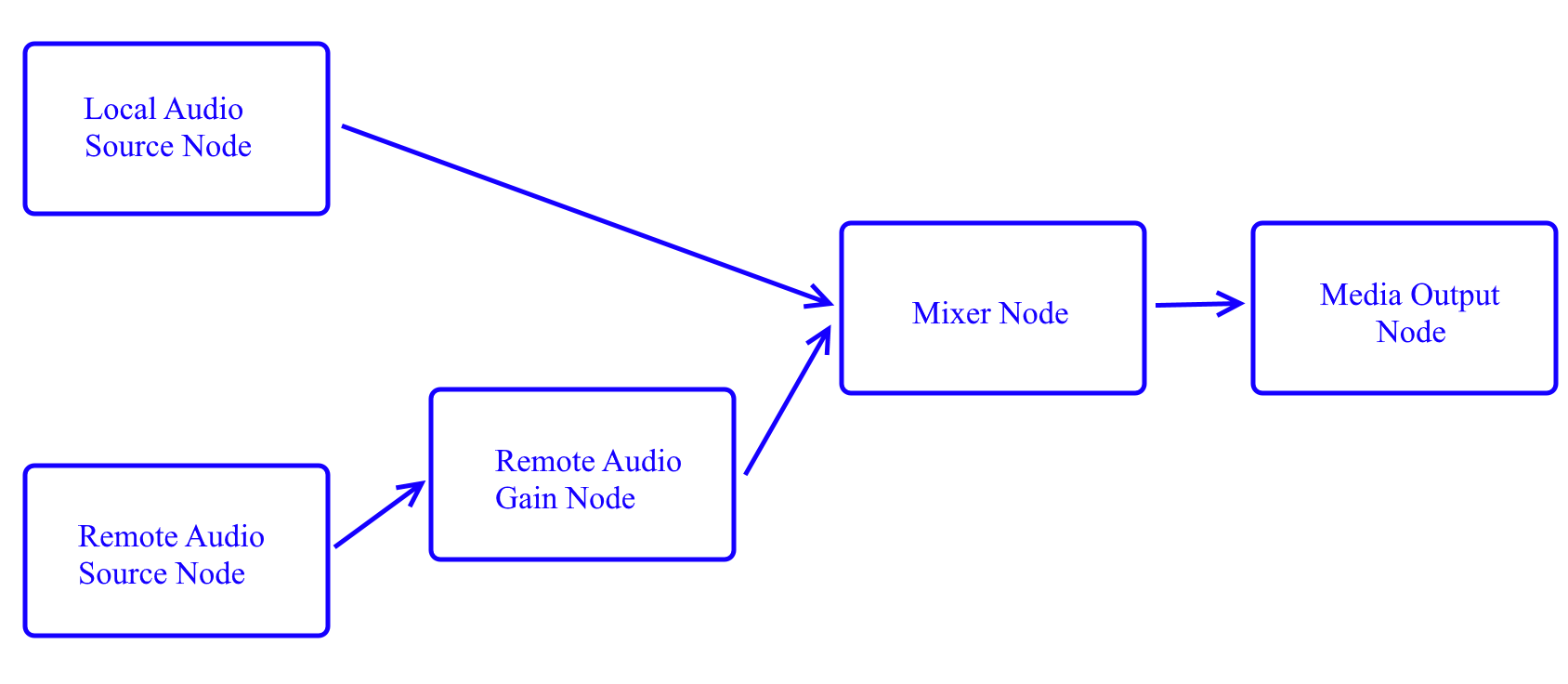

This stream is not ready to be sent to the server yet: it has no sound, because a canvas carries video only. To merge the audio streams, we use the Web Audio API. It lets us create "nodes" (producers or processors of audio) and connect them to one another. Schematically, we use it as follows.

We start by turning the WebRTC streams into audio source nodes (LocalAudioSourceNode and RemoteAudioSourceNode for the local and remote sound, respectively) and then merge them in MixerNode. Since we need to control the caller's volume separately, we add one more node between the remote source and the mixer. Finally, MediaOutputNode turns the result into a MediaStream that WebRTC methods can work with.

...

// Initialization
let ctxAudio = new AudioContext(); // Audio context used to create the nodes
let mixerNode = ctxAudio.createGain(); // Mixer node: all inputs connected to it are summed
let remoteAudioGainNode = ctxAudio.createGain(); // Controls the volume of the remote stream
remoteAudioGainNode.gain.value = 1.0; // 1.0 - full (unity) volume, 0.0 - muted
remoteAudioGainNode.connect(mixerNode); // Connect it to the mixer
let mediaOutputNode = ctxAudio.createMediaStreamDestination(); // Output node for WebRTC
mixerNode.gain.value = 1.0; // Set the initial gain to full volume
mixerNode.connect(mediaOutputNode); // Connect the mixer to the output node

...

// Create the local source node when available
// localStream contains the local audio and video (it can be obtained with getUserMedia)
let localAudioSourceNode = ctxAudio.createMediaStreamSource(localStream);
localAudioSourceNode.connect(mixerNode); // Connect it to the mixer
// Add the mixed audio to the stream we send to the server
// (streamToSend is assumed to be the stream returned by canvas.captureStream())
streamToSend.addTrack(mediaOutputNode.stream.getAudioTracks()[0]);

...

// Create the remote source node when available
// remoteStream contains the caller's audio and video
let remoteAudioSourceNode = ctxAudio.createMediaStreamSource(remoteStream);
remoteAudioSourceNode.connect(remoteAudioGainNode); // Connect it to the corresponding gain node

...

This is the minimal code needed to get the merged sound into streamToSend. This is the stream we attach to the video element the broadcaster uses as a stream preview, and it is also the stream transmitted to the server. As a result, the broadcaster sees the real stream preview and can control it without any delay. Going back to requirement 3, the broadcaster also needs a preview of the call before it is added to the real stream. This is easy: we attach the stream received over WebRTC (remoteStream) to a video element placed over the main preview. Muting the caller takes a single line of code:

To mute:

remoteAudioGainNode.gain.value = 0.0;

To unmute:

remoteAudioGainNode.gain.value = 1.0;
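The broadcaster's controls from requirement 2 can be gathered into one object. This is a minimal sketch with hypothetical names: it reuses remoteAudioGainNode and remoteAudioSourceNode from the code above, plus a state.showCaller flag that the canvas drawing loop is assumed to check before drawing the caller's video. Instead of assigning gain.value directly, it uses AudioParam.setTargetAtTime, which moves the volume to the target over a few milliseconds and tends to avoid audible clicks; that choice is ours, not part of the original implementation.

```javascript
// Hypothetical controller for the broadcaster's in-call controls.
class CallerControls {
  constructor(ctxAudio, remoteAudioGainNode, state) {
    this.ctxAudio = ctxAudio;            // the AudioContext that owns the nodes
    this.gainNode = remoteAudioGainNode; // gain node between the caller and the mixer
    this.state = state;                  // assumed to be read by the drawing loop
  }

  // Mute or unmute the caller. setTargetAtTime approaches the target
  // exponentially (15 ms time constant) instead of jumping instantly.
  setMuted(muted) {
    const target = muted ? 0.0 : 1.0;
    this.gainNode.gain.setTargetAtTime(target, this.ctxAudio.currentTime, 0.015);
  }

  // Hide or show the caller's video; the drawing loop skips it when hidden.
  setVideoVisible(visible) {
    this.state.showCaller = visible;
  }

  // End the call: hide the video, detach the caller's audio, close the connection.
  endCall(remoteAudioSourceNode, peerConnection) {
    this.setVideoVisible(false);
    remoteAudioSourceNode.disconnect(); // removes the caller from the mix
    peerConnection.close();             // the RTCPeerConnection used for the call
  }
}
```

For example, controls.setMuted(true) plays the role of remoteAudioGainNode.gain.value = 0.0, and controls.setVideoVisible(false) hides the caller without touching the audio graph.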

So the main goal is achieved. This solution is much cleaner because all the merging happens on the client side, inside the browser, but that is also its main weakness: it depends on the user's hardware. However, our tests have shown that even the mobile version of Chrome can merge videos this way, and a single PC can act as both the caller and the broadcaster at the same time without problems. Below, you can see an example of video and audio merging. It uses generated video streams, but the main function works the same way with real WebRTC streams.